The three-layered architecture as most of us know it is dead. Killed by the cloud.

When I started programming for a paycheck, back when we still had just ASP and VB6, we were taught the good old pattern of three layers. A request would come in through some UI layer, be it a desktop UI or a web page. We would then go through some sort of business layer before hitting the database. Our architecture was quite clean, with our business logic contained in a DLL, some data kept in memory, and the database accessed through an ISAPI extension in IIS.

This was not a bad setup, and it worked up to a certain point. Our codebase grew, and sure enough we ended up with complex code due to missing separation of concerns, classes doing far too much, and virtually no tests. Later, in the early era of .NET 1 (since the beta), we experienced scaling problems when we tried to move the same architecture to a large B2B website. Not really a surprise.

Architecture such as this is still seen in production, and as late as this summer I was on a project following the same general pattern: UI, business logic and database. Internally the project was, and is, a huge success. The developers worked hard to deliver business value but were slowly facing the same challenges. It was a real pain after working on projects with messaging, event sourcing, "micro" services and bounded contexts curated with care.

What's wrong?

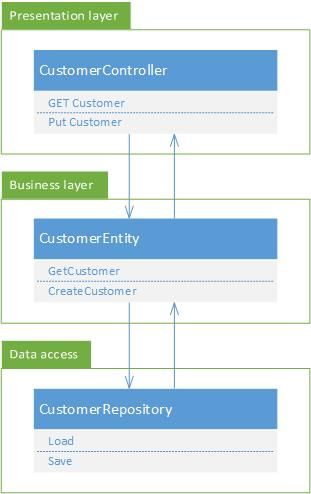

As one can see from the diagram above, a call traverses down through all three layers, and the data returns up again along the same path. This does not scale. It is extremely hard to follow the SOLID principles, and you are bound to fail on bounded contexts (pun intended). One of the first things that happens is that the business model grows. Your customer entity is used to do CRUD operations on a customer. All fine. We add some business rules to the class. But then we need a customer object for invoicing. Ahh, let's re-use. Add more business rules. Suddenly your customer object is no longer just a customer. We then need to handle huge amounts of requests. We try to scale out and produce more CPU heat, but now our queries to the database are expanding, since we really needed invoice data with that customer on the invoice page. Let's add caching.

Uh-oh, we need cache invalidation across multiple machines.

Next up we need to handle gazillions of requests per second and our only way to scale is more CPU, more machines, bigger database servers.
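To make the growing customer entity concrete, here is a minimal sketch of the alternative: one small model per bounded context, with a translation at the boundary. The class names `CrmCustomer` and `InvoiceRecipient`, their fields, and the 30-day default are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass


# Hypothetical models: each bounded context owns its own view of a customer.
@dataclass(frozen=True)
class CrmCustomer:
    """Customer as the customer-management (CRUD) context sees it."""
    customer_id: str
    name: str
    email: str


@dataclass(frozen=True)
class InvoiceRecipient:
    """Customer as the invoicing context sees it: billing data only."""
    customer_id: str
    billing_name: str
    billing_address: str
    payment_terms_days: int = 30  # assumed default, for illustration

    def due_in_days(self) -> int:
        # Invoicing rules live here, not on the CRM model.
        return self.payment_terms_days


def to_invoice_recipient(customer: CrmCustomer, billing_address: str) -> InvoiceRecipient:
    """Translate at the context boundary instead of sharing one class."""
    return InvoiceRecipient(
        customer_id=customer.customer_id,
        billing_name=customer.name,
        billing_address=billing_address,
    )
```

The invoicing side never sees the e-mail address or any CRM rule, so each model can change without dragging the other along.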

Divide and conquer

Polyglot programming, CQ(R)S, queues, NoSQL and SQL, event sourcing and cloud services to the rescue

You've probably heard some of these terms, perhaps even tried one or two. The good news is that with full-stack cloud platforms, such as Amazon and Azure, the cost of combining such services is really low and they are readily available. This is especially important when we talk about operations, hardware, maintenance and backup.

Need to handle loads of traffic? Fine, not a problem. Let's post to a high-capacity message bus, like Azure Event Hubs. Aggregate and stuff the data in storage tables or in memory with Redis. Store some of it in a MySQL database. But what about accessing? Not a problem, we only select from pre-processed data for our specific view. Using key/value we can easily store this in either SQL or a DocumentDB. But pre-processing? Yep, let's scale that from our storage tables or Redis instances using worker roles. Now, voila. Problem solved[1].
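The flow above can be sketched in a few lines. This is only an in-process illustration under loud assumptions: a `queue.Queue` stands in for the message bus, a plain dictionary stands in for the key/value store, and the function names and the `invoice-summary:` key format are made up for the example.

```python
import queue
from typing import Dict, Optional

# Stand-ins (assumptions): a real system would use a message bus such as
# Azure Event Hubs and a store such as Redis or a document database.
event_bus = queue.Queue()
read_views: Dict[str, dict] = {}


def post_event(customer_id: str, amount: float) -> None:
    """Write side: accept the request by enqueueing an event, nothing more."""
    event_bus.put({"customer_id": customer_id, "amount": amount})


def run_worker() -> int:
    """Worker: drain the bus and pre-process one view per customer.

    Returns the number of events processed in this run.
    """
    processed = 0
    while True:
        try:
            event = event_bus.get_nowait()
        except queue.Empty:
            break
        key = f"invoice-summary:{event['customer_id']}"
        view = read_views.setdefault(key, {"invoice_count": 0, "total": 0.0})
        view["invoice_count"] += 1
        view["total"] += event["amount"]
        processed += 1
    return processed


def get_invoice_summary(customer_id: str) -> Optional[dict]:
    """Read side: a single key lookup against pre-processed data."""
    return read_views.get(f"invoice-summary:{customer_id}")
```

Posting two events for the same customer and then calling `run_worker()` leaves one ready-made summary under that customer's key. Until the worker has run, `get_invoice_summary` returns `None`, which is the eventual consistency you accept in exchange for cheap reads.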

The ease and availability of cloud services, where management is limited and automation is built in, make such scenarios compelling and cost-effective. Moving away from the old up-and-down three-layered architecture will prepare you for taking your solutions to new limits and possibilities.

[1]

If your architecture is broken or just not there, it will take time. It's easy to get lost, over-use something which fits only one use case, or just make it too complicated. However, there are techniques and patterns out there to help, such as strangling, isolation and automated tests.
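As a rough illustration of strangling, the sketch below puts a facade in front of the old application and moves one route prefix at a time to new code. Everything here is hypothetical: `legacy_app`, `new_invoice_service`, and the `StranglerFacade` class are invented names, and a real setup would more likely do this in a reverse proxy or API gateway.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


def legacy_app(path: str) -> str:
    """Hypothetical stand-in for the existing three-layer application."""
    return f"legacy handled {path}"


def new_invoice_service(path: str) -> str:
    """Hypothetical stand-in for a newly extracted service."""
    return f"new invoice service handled {path}"


class StranglerFacade:
    """Routes migrated path prefixes to new handlers, everything else to legacy."""

    def __init__(self, legacy: Handler) -> None:
        self._legacy = legacy
        self._migrated: Dict[str, Handler] = {}

    def migrate(self, prefix: str, handler: Handler) -> None:
        # Register a path prefix that the new code now owns.
        self._migrated[prefix] = handler

    def handle(self, path: str) -> str:
        # Longest matching prefix wins; unmatched paths fall through to legacy.
        for prefix in sorted(self._migrated, key=len, reverse=True):
            if path.startswith(prefix):
                return self._migrated[prefix](path)
        return self._legacy(path)
```

Calling `facade.migrate("/invoices", new_invoice_service)` moves only that slice of traffic. The rest keeps hitting the old code until it, too, has been replaced and covered by tests.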